SplitMetrics A/B Testing & Validation Framework to Win on the App Store

Gabriel Kuriata

Each successful design is preceded by many failed ones. All great apps had poor and underperforming iterations before they became big. Here’s the thing: the path to success is shorter when you kill those bad iterations and designs early. Possibly even before the app is released. How?

A/B testing of mobile apps & validation of ideas, concepts and strategies is a process that puts failures under your direct control, allowing you to kill or abandon them with minimal cost. At the same time, it allows you to capitalize on success quickly. It’s vital for development and growth at every stage of an app’s life.

A/B testing of mobile apps and validation can be incredibly fun, sometimes bringing unexpected results. It isn’t difficult. SplitMetrics Optimize, our A/B testing platform for app product pages on mobile app stores, gives app publishers all the tools to own the process.

This article elaborates on the official A/B testing & validation framework developed by SplitMetrics to use our platform to its fullest potential.

Simply put, testing & validation is crucial and this is how we do it.

Scale determines how hard a failure hits. Today, with millions of apps on the market and thousands of new ones joining the crowd every day, any form of success requires significant investment, not only in development but also in marketing. With marketing budgets for casual games frequently reaching seven figures a month, a failure hits their publishers like a freight train.

Good apps with great features aren’t impervious to failure. Great apps can underperform because of their product pages. Customer expectations change. Entire genres come, go and return from beyond to app stores. Jumping on the gravy train is much more difficult than it sounds. Are match-3 games all the rage now, or are they being replaced by match-4 games now? Sometimes it’s difficult to tell.

With a great scale of operations comes great responsibility. For big projects, testing is ingrained in the development process and ubiquitous among successful mobile app developers. Many factors contribute to an app’s potential for profit or loss, and testing provides considerable control over many of them. It can directly influence installation, drop-off and engagement rates. It also has an implicit impact on lifetime value, revenue and in-app purchases. For smaller projects, testing can also be a life-saver, as strained budgets leave less room for error. The most important point is this: A/B testing can be outstandingly beneficial to all developers and publishers, but its value has to be assessed case by case. A good framework and experience help to maximize the return on this investment. This is why we’re always ready to help and assist in many projects.

So, if the investment in testing your app looks sensible to you, let’s get down to the subject matter.

Our official testing and validation framework consists of eight steps. It doesn’t matter what stage of development your app is in, the framework remains the same:

We ruthlessly follow this framework for apps in all stages of development, simply shifting our focus to elements that matter during them. The focus of SplitMetrics Optimize and what we do in the Agency is testing & validation of mobile app product pages on app stores. Given that, our framework serves different purposes depending on the stage of development an app is in:

To answer a frequently asked question in advance: yes, it’s possible to conduct meaningful experiments and tests even when your app is nothing more than some ideas on paper (or rather Photoshop concept screens). We have the tools in SplitMetrics Optimize to test that and they’re used more frequently than you might think!

Anyway, in this article we give examples of successful validations for apps in all mentioned stages of development, so let’s get right into it.

Your app will have to beat thousands of other apps to… win a couple of seconds of attention from a user. In that time you’ll have to convince them that your app is worth installing. The research step is a solid foundation for answering the question of “How the hell do we do that?”, provided it consists of these elements:

But how do you actually start your research? The very first step in this process is… checking whether someone has already done it.

Don’t waste your precious time & resources reinventing the wheel. There’s plenty of reference material available, as well as reports, benchmarks and so on. You can easily fulfill the goals of this stage with good desk research. Check out these reports SplitMetrics published:

ASO Benchmarks and Mobile Trends Report

Lifestyle Apps: ASO and UA Trends

They can serve as a good reference for what the end result of this step might look like. Also, don’t hesitate to check work done by our partners. Mobile marketers are a friendly bunch and share their knowledge and market insights regularly. Check out blogs of RevenueCat, Moburst, Phiture, AppRadar, Mobile Groove and many others.

Otherwise, this stage can be time-consuming (but at the same time incredibly fun). Do you want (or have) to do this phase all by yourself? Here is a checklist for each part of the research phase:

This might be the most difficult part for an individual publisher, as a key component here is industry benchmarks that reflect the current state of the market. Average values of tap-through rates, conversion rates and other metrics are difficult to acquire without a large portfolio of clients (that an agency or a company like ours might have) and a history of tests & experiments, so most probably you’ll just HAVE to rely on external sources to evaluate the feasibility of creating an app for a particular category and audience.

Keywords are another key component. They directly reflect the functionality of apps and the intent of users. It’s difficult to evaluate their competitiveness and popularity without proper tools, although in theory the Apple App Store and Google Play store offer what’s necessary to accomplish this task.

Another area to examine is the actual number of similar apps on the app store. So… to answer the question of “How many match-3 games are out there exactly?”… someone has to count them. It truly matters for the next question: “How do we add another one that is successful?”. You won’t know that without…

You should conduct competitor analysis on two levels: macro and individual apps. Take a population of apps most similar to yours and try to distinguish any trends & patterns in design and messaging. Take a sample of the most successful apps and study them individually.

This part will help you formulate your value proposition during the Ideation phase. Either offer something new (a unique feature), perfect what’s already out there (better performance)… or find yet another way.

This article on ASO competitive research may be useful to understand this step better.

Audience analysis: this part may require some investment in probing ad campaigns, to verify responsiveness to ad groups organized by key demographic metrics. You might complete this step later, because discovering your audience may be the result of using our testing framework. This is exactly what our client Etermax did with their quiz app.

You can also make assumptions based on market research and competitor analysis, as it’s possible to deduce from their creatives who the apps most similar to yours are targeting.

This is what you should have after completing this step:

With these at hand, you’re ready for the next step.

In this phase, you collect ideas for all the things that you’ll want to test. These can be key features (highlighted through app store creatives), artistic direction, screenshot types, icons. What’s on the table depends on the development stage you’re in.

We would like to start with big, different concepts at first – to really understand what the core motivation of the users is. What is the reason for them to download the app? Which USP would speak to the majority of the audience? Once we know the core motivation and we find a winner – we keep testing and optimizing in a fine-tuning process.

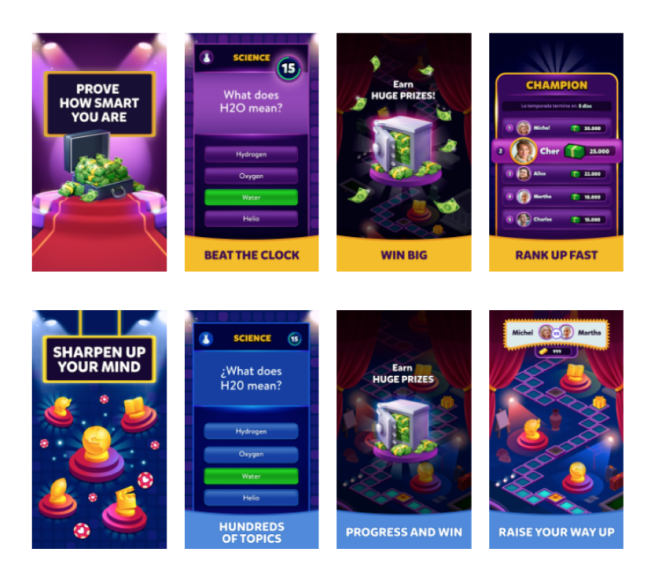

To illustrate this step on a real example, here’s a case of Etermax. After the success of Trivia Crack, Etermax had a new Trivia game in development, targeted at a more senior audience. What value can the app bring to its intended audience?

We had a new Trivia game in development, aimed at a more senior audience. We wanted to know before the release of the game what type of users we were targeting. With the product team, we set up 2 user archetypes.

The two archetypes were:

Both ideated archetypes are valid and have potential. However, given their mutual exclusion and overall impact on messaging present on the app’s product page on the App Store, as well as in-game design, there was only room for one.

In this stage, you use your ideas to form hypotheses that can be verified and tested. What is a hypothesis? Well, essentially – it’s an educated guess that discusses variables that can be verified. How you formulate your hypothesis determines the design and execution of your validation later.

Let’s get back to our Etermax case. We have two big ideas:

The hypothesis: senior audience might prefer a more casual game that promises self-improvement and sharpening of their skills and intellect.

It’s not uncommon to see A/B tests run this early in the development cycle. Read the full Etermax case study to see how pre-launch testing can work. We also recommend this article that explains why it’s actually very beneficial to start testing even before the release.

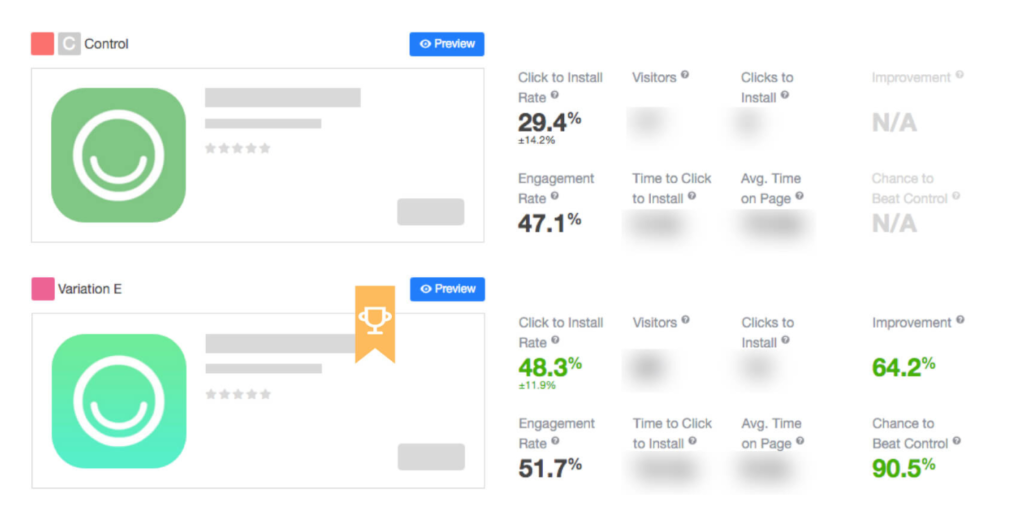

Another example is the case of Hobnob and a rebranding that led to a decrease in conversions and tap-through rate. Hobnob is an app that helps people create professional-looking event invitations and distribute them via text messages. Which element of the app’s product page may affect those metrics?

The hypothesis: the app icon is the only graphic asset that’s shown both in the search results and on the product page. Changing it will improve both tap-through rates and conversion rates.

We recommend a top-down approach in this stage. Your first hypothesis should discuss the variables (in our case, product page creatives) with the biggest visibility and possible impact on users: the app’s icon, the first screenshot, the visuals responsible for the very first impression. You’ll have the opportunity to go deeper in future experiments. The best strategy is to test your changes one by one, so that any change in metrics can be attributed to a single element.

Transform your hypotheses into variations for testing. How? Look at the screen below:

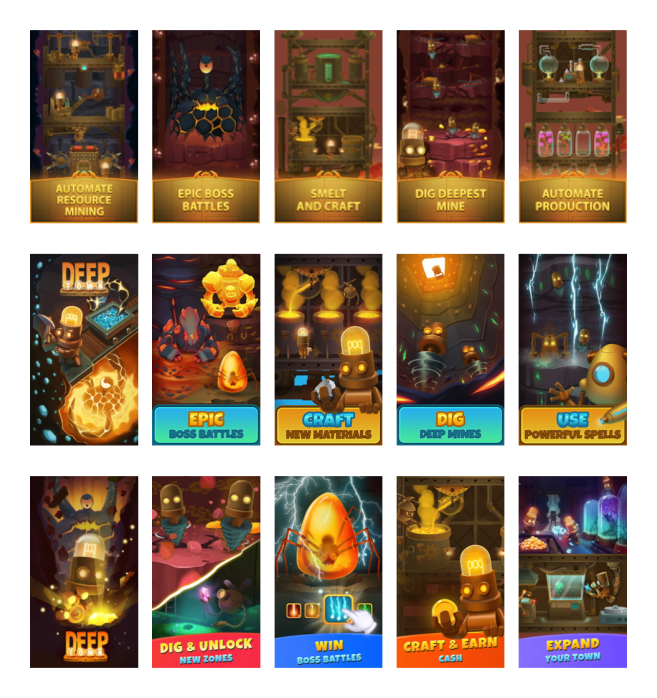

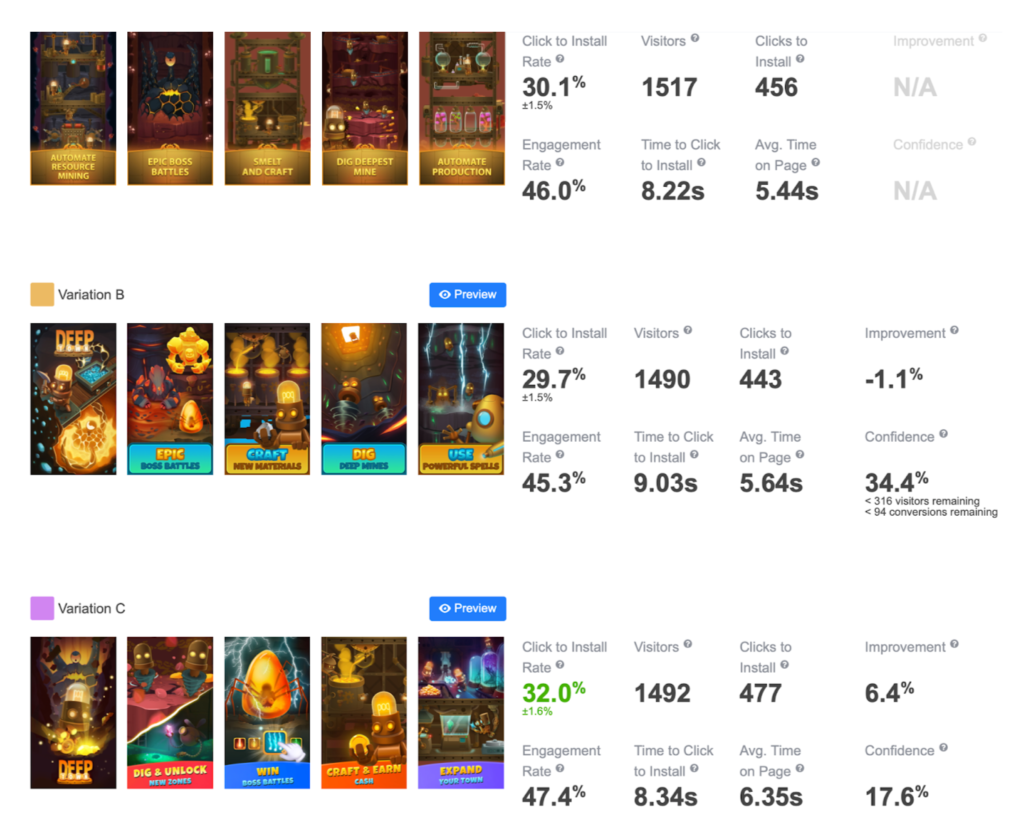

Another fine example of a proper selection of screenshots for testing is the case of Rockbite and Mining Idle Tycoon:

In this step we’re diving into all the nitty-gritty details. This is the step when our designs and image sets are sent from the design team into our SplitMetrics Optimize platform.

Designing & executing an experiment involves these steps:

Remember, compare what’s comparable. Traffic from Facebook campaigns differs in quality from traffic acquired through Apple Search Ads. Results may also turn out inconclusive, so be prepared to begin another test right away.
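To make “compare what’s comparable” and “inconclusive” more tangible, here is a minimal sketch in Python. It is not how SplitMetrics Optimize analyzes experiments; it is a generic two-proportion z-test, applied separately to each traffic source. The function names, the 0.05 significance threshold and all visitor and install numbers are assumptions made up for illustration.

```python
import math

def two_proportion_z_test(installs_a, visitors_a, installs_b, visitors_b):
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    rate_a = installs_a / visitors_a
    rate_b = installs_b / visitors_b
    # Pooled conversion rate under the assumption that A and B perform the same
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

def classify(installs_a, visitors_a, installs_b, visitors_b, alpha=0.05):
    """Label one experiment as 'A wins', 'B wins' or 'inconclusive' (alpha is an assumption)."""
    z, p_value = two_proportion_z_test(installs_a, visitors_a, installs_b, visitors_b)
    if p_value >= alpha:
        return "inconclusive"
    return "B wins" if z > 0 else "A wins"

# Hypothetical (installs, visitors) per variation, kept separate per traffic source
# so that Facebook traffic is never mixed with Apple Search Ads traffic.
results = {
    "apple_search_ads": {"A": (120, 2000), "B": (165, 2000)},
    "facebook": {"A": (60, 2000), "B": (66, 2000)},
}

outcomes = {
    source: classify(*variations["A"], *variations["B"])
    for source, variations in results.items()
}

for source, outcome in outcomes.items():
    print(f"{source}: {outcome}")
```

With these made-up numbers, the same pair of variations produces a clear winner on one traffic source and no reliable difference on the other, which is why results from different sources should be read separately rather than pooled.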

All in all, our platform greatly simplifies this step.

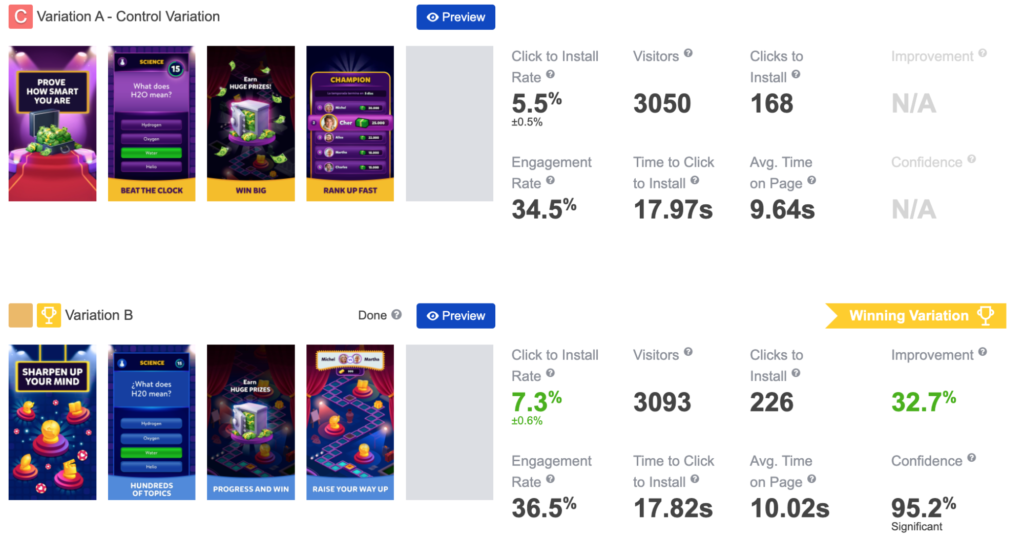

So how did our tests fare? Let’s have a look at a result from SplitMetrics Optimize:

All cases shown above involved well-planned tests that produced decisive results for the winning variations. This isn’t always the case. Basically, we can have 3 possible outcomes for each executed experiment:

Sharing what you know with others may have a big impact not only on your project but on others as well. All your research and preparation can be a valuable lesson to other teams. Transparency ensures proper expectations from stakeholders regarding future projects. An internal knowledge base may greatly reduce the amount of work put into any future validation processes.

As soon as you have decisive results – implement and observe changes in metrics. Don’t stop testing, don’t stop growing.

Seasonality also plays a role in maintaining continuity of A/B testing of mobile apps. You can significantly boost your results by adapting your app store presence to summer holidays, Christmas or any other local festive period. The gains can be so significant that they warrant early preparation.

Trends change, expectations of users evolve and so should your app. As long as the benefits of maintaining an app outweigh the cost of validating & improving it, you should continue to seek out new opportunities for growth.